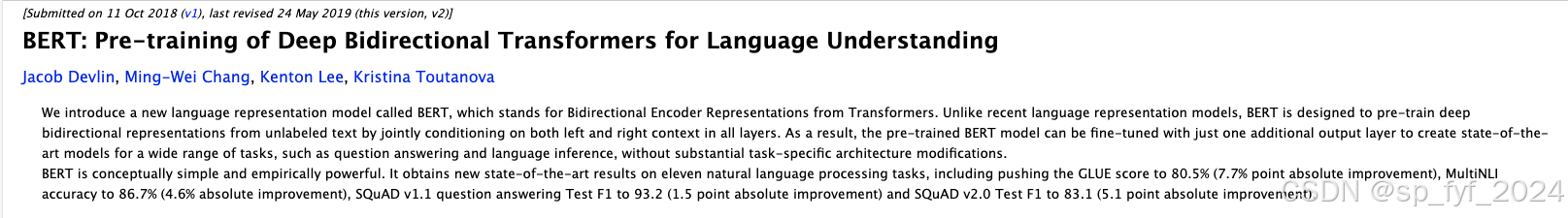

[Large Language Models: Paper Close Reading] Google BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

0. Introduction @article{devlin2018bert, title={Bert: Pre-training of deep bidirectional transformers for language understanding}, author={Devlin, Jac...

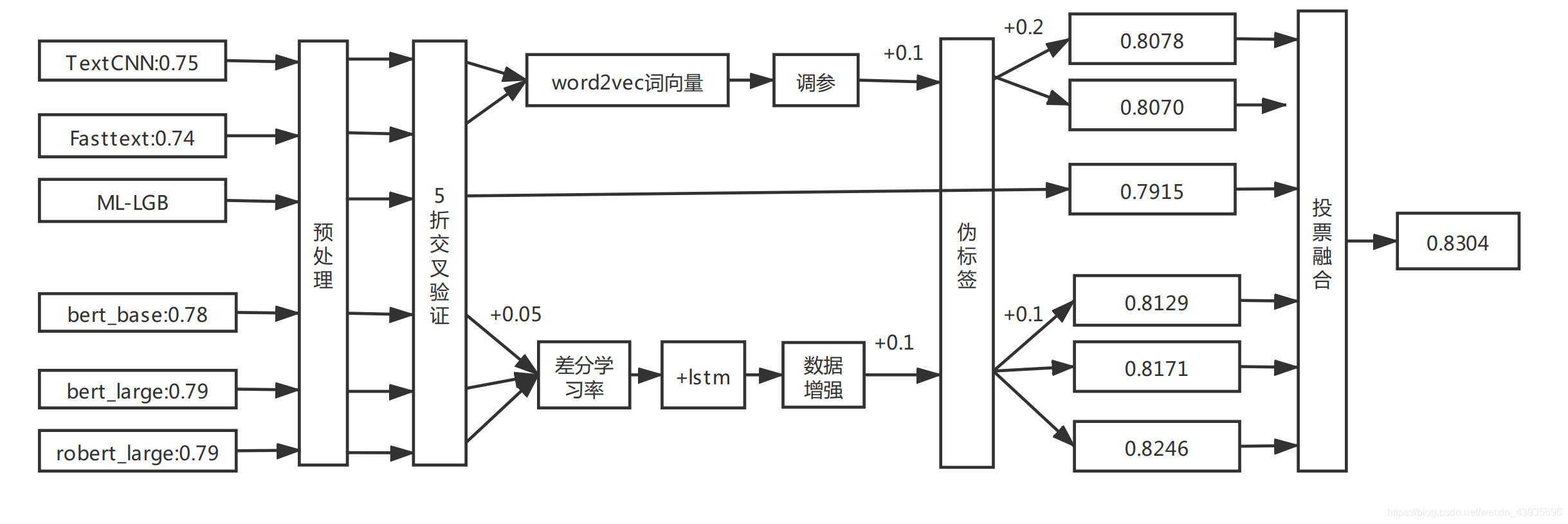

[NLP] iFLYTEK English Academic Paper Classification Challenge, Top-10 Open-Source Multi-Solution Series, Part 5: BERT Solution

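1 Related information: [NLP] iFLYTEK English Academic Paper Classification Challenge, Top-10 Open-Source Multi-Solution Series, Part 1: Post-Competition Summary and Analysis; Part 2: Data Analysis; Part 3: TextCNN and Fasttext Solutions; Part 4: Machine Learning LGB Solution; [NLP] iFLYTEK English Academic Paper Classification Challenge, Top-10 Open-Source Multi-Solution Series....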

[RoBERTa] Paper Implementation: RoBERTa: A Robustly Optimized BERT Pretraining Approach

Paper: RoBERTa: A Robustly Optimized BERT Pretraining Approach. Authors: Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, Veselin Stoyan...

![[RoBERTa] Paper Implementation: RoBERTa: A Robustly Optimized BERT Pretraining Approach](https://ucc.alicdn.com/pic/developer-ecology/w2a72w4omzoyy_2554cc1d71a14bdbb5ecee71b0325baa.png)

[DistilBERT] Paper Implementation: DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter

Paper: DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter. Authors: Victor Sanh, Lysandre Debut, Julien Chaumond, Thomas Wolf. Date: 2020. URL: https://github.com/hugging...

![[DistilBERT] Paper Implementation: DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter](https://ucc.alicdn.com/pic/developer-ecology/w2a72w4omzoyy_5e399730252a474ebd1a6264ecc1998e.png)

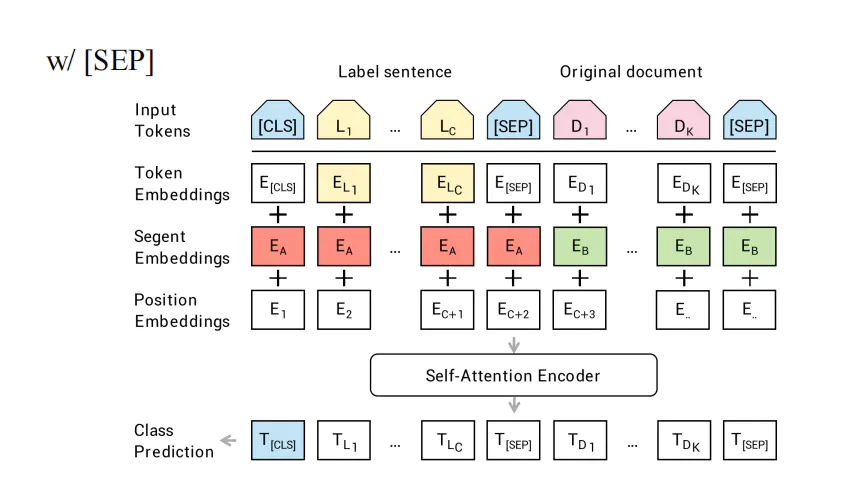

[Paper Notes] Fusing Label Vectors into BERT: An Improvement for Text Classification

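Paper overview: fusing label embeddings into BERT, an efficient improvement for text classification. Paper title: Fusing Label Embedding into BERT: An Efficient Improvement for Text Classification. Paper link: https://aclanthology.org/2021.findings-acl.152.pdf. Authors: {Yijin Xiong etc.} Abstract: As....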

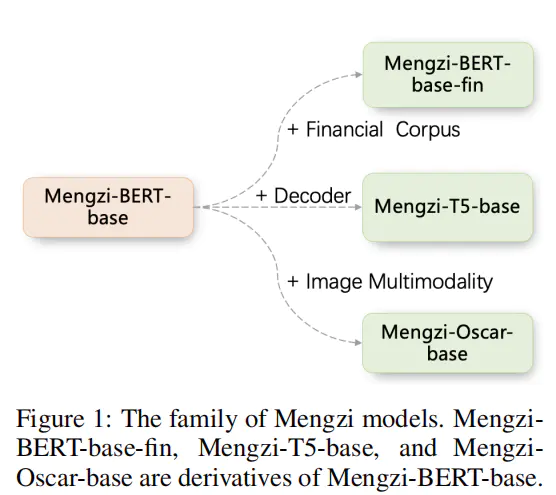

[Paper Notes] When BERT "Alchemy" Is Philosophy Rather Than Mysticism: The Mengzi Model

Paper title: Mengzi: Towards Lightweight yet Ingenious Pre-trained Models for Chinese. Paper link: https://arxiv.org/pdf/2110.06696.pdf. Paper code: https://github.com/Langboat/Mengzi. Authors: {Zhuosheng Zhang etc.} In July of this year, Langboat Technology released the Mengzi mod....

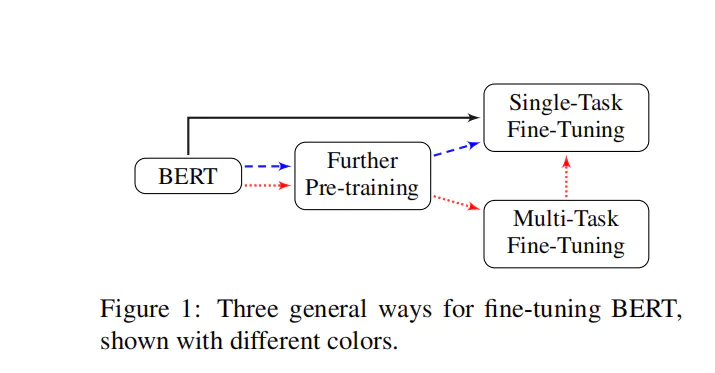

[Paper Walkthrough] A Score-Boosting Tool for Text Classification: A Complete Collection of BERT Fine-Tuning Tricks

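Paper title: How to Fine-Tune BERT for Text Classification? Chinese title: 如何微调 BERT 进行文本分类? Authors: Prof. Xipeng Qiu's research group, Fudan University. Experiment code: https://github.com/xuyige/BERT4doc-Classification. Preface: Pre-trained models are now very popular among competition participants and are essentially the first choice for an NLP competition baseline (image classification has pre-trained models as well). Although pre-trained models....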